Psychological Entropy and the Hierarchical Mind

A chapter preview and update on "The Return of the Great Mother"

As some of you know, most of my time these days is being spent finishing The Return of the Great Mother, the book I’ve been working on for about seven years. Fourteen of the planned thirty-five chapters are now fully drafted, and progress is rapid. Because the ideas are already well-developed, writing each chapter has mostly been a smooth process.

Barring any major disruption, the full draft will be done by the new year, with release expected next summer.

Below is a lightly edited draft of Chapter 6, titled “Psychological Entropy and the Hierarchical Mind.” It presents a basic model of how the mind works, drawing primarily on predictive processing, relevance realization, and the psychological entropy framework. This chapter is from Part 1 of the book, which lays the scientific groundwork for the arguments that follow.

A quick caveat: The citations aren’t cleaned up yet. I’ve used mixed formats and omitted the bibliography for now. I’m also missing a lot of citations, most of which are included as comments in the software I use to write. That will all be addressed when I move to final formatting and reference management. Doing it now would just be redundant.

If you’re a paid subscriber, thank you. Your support is funding the writing of this book, and you’ll receive the finished version for free.

Here’s the current draft of Chapter 6. Feedback is welcome, especially if you think I’ve made a factual error somewhere.

Enjoy.

Chapter 6: Psychological Entropy & The Hierarchical Mind

For most of modern history, scientists believed that perception was a bottom-up process: the brain receives raw data from the senses, assembles that data into detailed representations of the outside world, and then uses those representations to decide how to act. We now know that this model of perception is wrong. Bottom-up sensory input does play a crucial role in perception, but it is woefully inadequate to explain the rich, detailed sensory world that we seem to occupy. This is because there is far too much sensory input available at any given time for you to take in all of it. Any attempt to create a detailed model of the world from sensory data alone is a computationally impossible task.

Instead, your brain and body do something much more efficient. Most of what you perceive at any given moment is not the result of bottom-up sensory input, but is rather a top-down prediction generated by your brain. In some important sense, your brain is a prediction machine, constantly generating top-down predictions about what you expect to perceive next. These predictions are shaped by evolved instincts (e.g., the modules discussed in Chapter 3), goals, past experiences, and context.

In this view of perception, referred to as predictive processing, most of what you actually process is the difference between your prediction of the world and the bottom-up sensory input. That difference is referred to as prediction error. Our perceptual system primarily processes prediction errors and ignores any input that does not produce an error. This means that we don’t necessarily see the world as it is. We see what we expect to see, modulating our expectations based on prediction errors so that we can constantly attempt to stay in touch with reality. Most of our perception is guesswork—fast, efficient, and usually good enough to keep us alive.

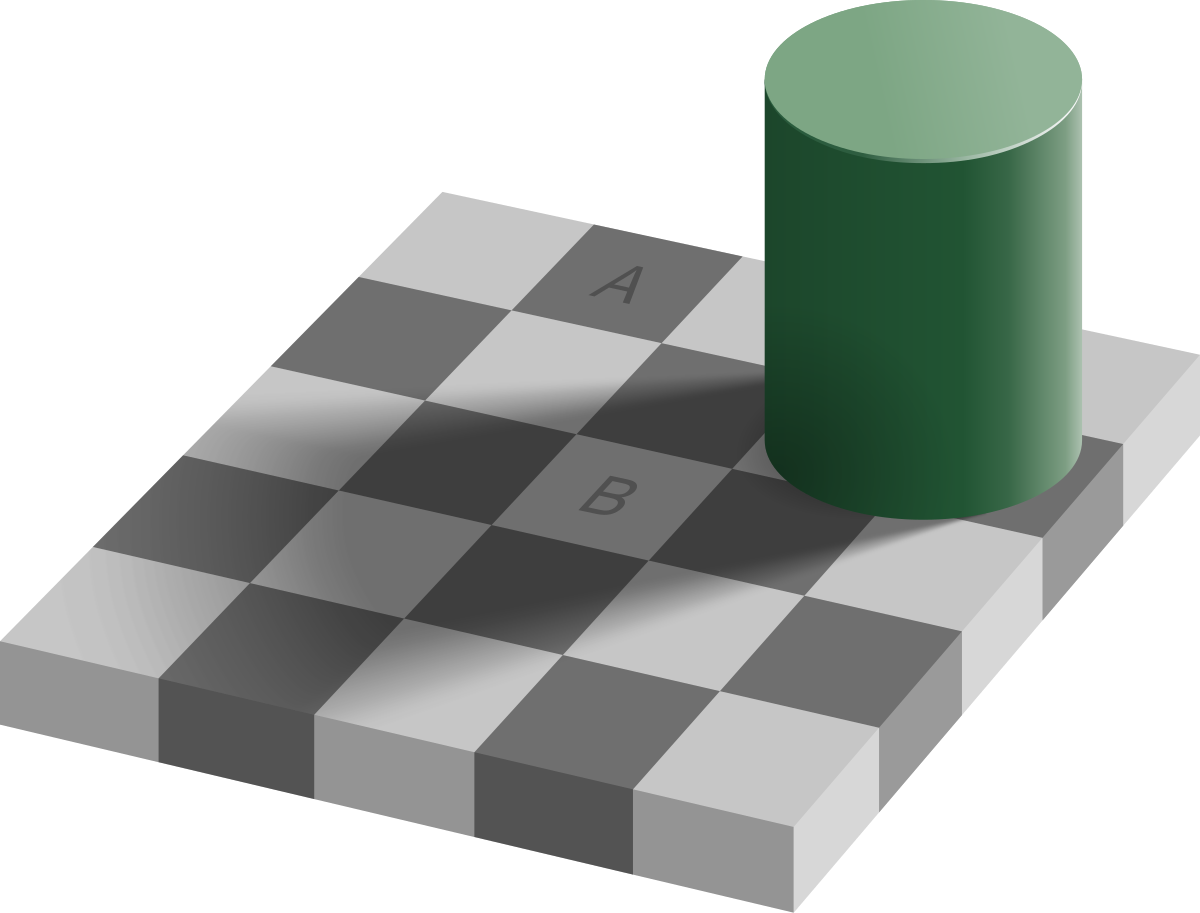

Consider this simple illusion:

Even when I know exactly what the illusion is, I can’t help but see the A and B squares as being different colors. They are, of course, the exact same shade of gray.

If perception were determined only by raw sensory input, we would see the same color when we look at these squares. Instead, we have an expectation that objects in shadow are being darkened. This expectation works entirely outside of our conscious awareness. Our perceptual system adjusts for this expectation by showing us a more useful interpretation of the checkerboard. B appears lighter than A because in the real world under these conditions, B would be a different, lighter color than A if the shadow were removed. The brain’s top-down predictions (about lighting, material properties, and familiar patterns) actively reshape the visual experience. Your perception of the object is an inference based on both prior knowledge (top-down prediction) and current sensory input.

This illusion vividly illustrates the principle that we don't see what’s actually there—we see what we expect to be there, given the context. The brain constructs perceptual reality by combining incoming data (bottom-up) with prior models (top-down). Here, the top-down model (how shadows affect appearance, what checkerboards look like) alters the interpretation of identical sensory input so that the same color now looks like two very different colors. The above illusion demonstrates that, in real-world conditions, expectation-mediated perception is far more useful than perceiving reality veridically.

Precision-Weighting & Attention

This predictive processing view of perception introduces a new problem. In a noisy, uncertain world, how much “weight” should we give to bottom-up sensory information and how much weight should we give to top-down predictions? For example, suppose you are driving on a curvy road. This is a road that you’ve driven many times before and so you know each curve almost by memory. Right now you’re driving at night-time, while it’s foggy outside. In this case, your bottom-up sensory input may not be very reliable, but you will have high confidence in your top-down predictions about the contours of the road. Under these circumstances, you’d give more weight to your top-down predictions than you normally would.

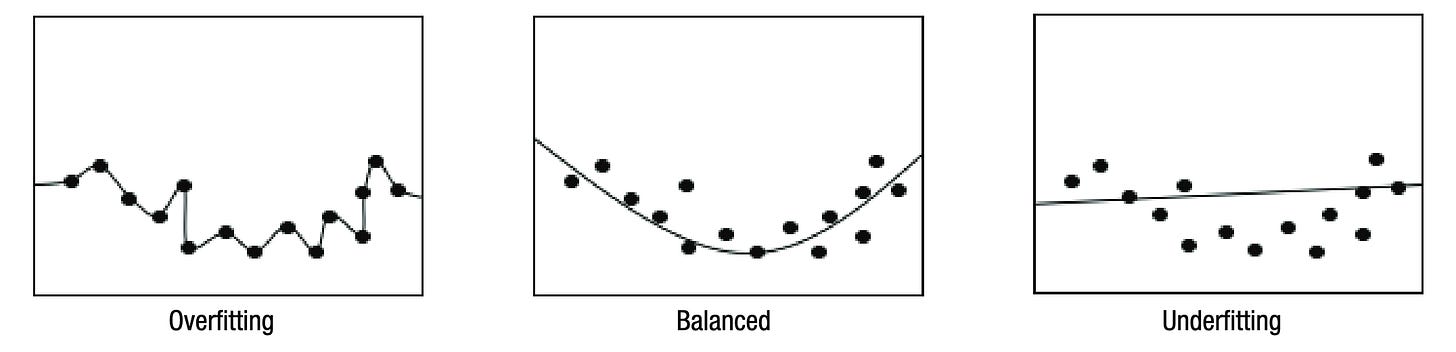

Under most realistic conditions, determining how much weight should be given to bottom-up input and top-down prediction is an extremely difficult task. If you give too much weight to bottom-up input, you’ll end up focusing on minute details, but missing out on the big picture. If you give too much weight to top-down predictions, you’ll end up hallucinating patterns that aren’t actually there. In statistics and machine learning, the former mistake would be referred to as “overfitting”. By giving too much weight to details, the model becomes overfitted to the data and will not generalize to new datasets. The latter mistake would be called “underfitting”. By giving too much weight to prior predictions, the model becomes underfitted to the data, meaning that top-down predictions will impose a pattern that doesn’t actually exist in the data.

The figure below demonstrates these two kinds of errors.

In this figure, there is a single noisy pattern: a curved line. By giving too much weight to each individual point, the model on the left picks up on noise as if it were meaningful, resulting in a pattern that’s overly complex and fails to generalize (overfitting). By trying to impose some prior assumption onto the data (e.g., that the pattern is a straight line), the model on the right “hallucinates” a pattern while the real pattern goes undetected (underfitting). I’m using the word hallucinate a little loosely here, as the term is normally used to describe perceiving something in the absence of any sensory input. But in a predictive framework, even seeing a straight line where a curved one actually resides can be understood as a kind of hallucination: an instance where the brain’s prior expectations override the incoming data and impose an incorrect pattern onto reality. In chapter X, we’ll see that each of us falls somewhere along this spectrum (either more prone to overfitting or underfitting our sensory data) and that there are tradeoffs associated with each side.
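For readers who think in code, here is a minimal sketch of the same two errors. Everything in it is an illustrative assumption rather than anything taken from the figure: the "real pattern" is a simple curve (`true_curve`), the underfitting model is a least-squares straight line, and the overfitting model is a nearest-neighbour "memorizer" that reproduces every noisy training point exactly.

```python
import random


def true_curve(x):
    # Hypothetical "real pattern" hidden in the noise: a simple curve.
    return x * x


def make_data(n, rng):
    # Sample n noisy observations of the curve.
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [true_curve(x) + rng.gauss(0.0, 0.1) for x in xs]
    return xs, ys


def fit_line(xs, ys):
    """Least-squares straight line: a strong prior imposed on the data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def fit_memorizer(xs, ys):
    """Nearest-neighbour lookup: treats every point, noise included, as meaningful."""
    points = list(zip(xs, ys))
    return lambda x: min(points, key=lambda p: abs(p[0] - x))[1]


def mean_squared_error(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


rng = random.Random(0)  # fixed seed so the run is repeatable
train_x, train_y = make_data(30, rng)
test_x, test_y = make_data(30, rng)  # fresh data from the same curve

line = fit_line(train_x, train_y)
memorizer = fit_memorizer(train_x, train_y)

for name, model in [("line (underfit)", line), ("memorizer (overfit)", memorizer)]:
    print(name,
          "| error on seen data:", round(mean_squared_error(model, train_x, train_y), 4),
          "| error on new data:", round(mean_squared_error(model, test_x, test_y), 4))
```

The memorizer is perfect on the data it has already seen and worse on new data, because it has learned the noise along with the curve. The straight line is wrong in roughly the same way on both, because its prior assumption never lets it see the curve at all.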

The process by which our perceptual systems assign “weight” to bottom-up sensory input or top-down prediction is referred to as precision-weighting. This term reflects the fact that we are assigning weight based on how “precise” the input or prediction is. In this context, “precision” can be thought of as a stand-in for “confidence”. If we are confident in the veracity of a sensory input or top-down prediction, then it will be given more weight in perception. This should not be understood as a conscious process. The vast majority of this precision-weighting goes on beneath conscious awareness.
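One common way to formalize this idea is to treat precision as inverse variance and to let each source of information pull on the final estimate in proportion to its precision. The sketch below applies that to the foggy-road example. The function name, the distances, and the precision values are all made up for illustration, and it is a toy calculation, not a model of what the brain actually computes.

```python
def precision_weighted_estimate(prior_mean, prior_precision,
                                sensory_value, sensory_precision):
    """Blend a top-down prediction with a bottom-up input, weighted by precision."""
    total_precision = prior_precision + sensory_precision
    # Fraction of the final estimate that is driven by the senses.
    sensory_weight = sensory_precision / total_precision
    prediction_error = sensory_value - prior_mean
    # Shift the prediction toward the input only as far as the input is trusted.
    return prior_mean + sensory_weight * prediction_error


# Hypothetical numbers: memory says the curve starts 100 m ahead,
# while your eyes suggest it starts 130 m ahead.
foggy_night = precision_weighted_estimate(
    prior_mean=100.0, prior_precision=9.0,      # well-learned road
    sensory_value=130.0, sensory_precision=1.0  # unreliable vision
)
clear_day = precision_weighted_estimate(
    prior_mean=100.0, prior_precision=1.0,      # unfamiliar road
    sensory_value=130.0, sensory_precision=9.0  # reliable vision
)

print("foggy night estimate:", foggy_night)  # stays close to the prediction
print("clear day estimate:", clear_day)      # moves close to the sensory input
```

The prediction error is the same 30 m in both cases. What changes is how much of that error is allowed through: about a tenth of it in the fog, about nine tenths of it in clear daylight.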

We’ll come back to precision-weighting later in this chapter and in the next two chapters.

The Hierarchical Mind

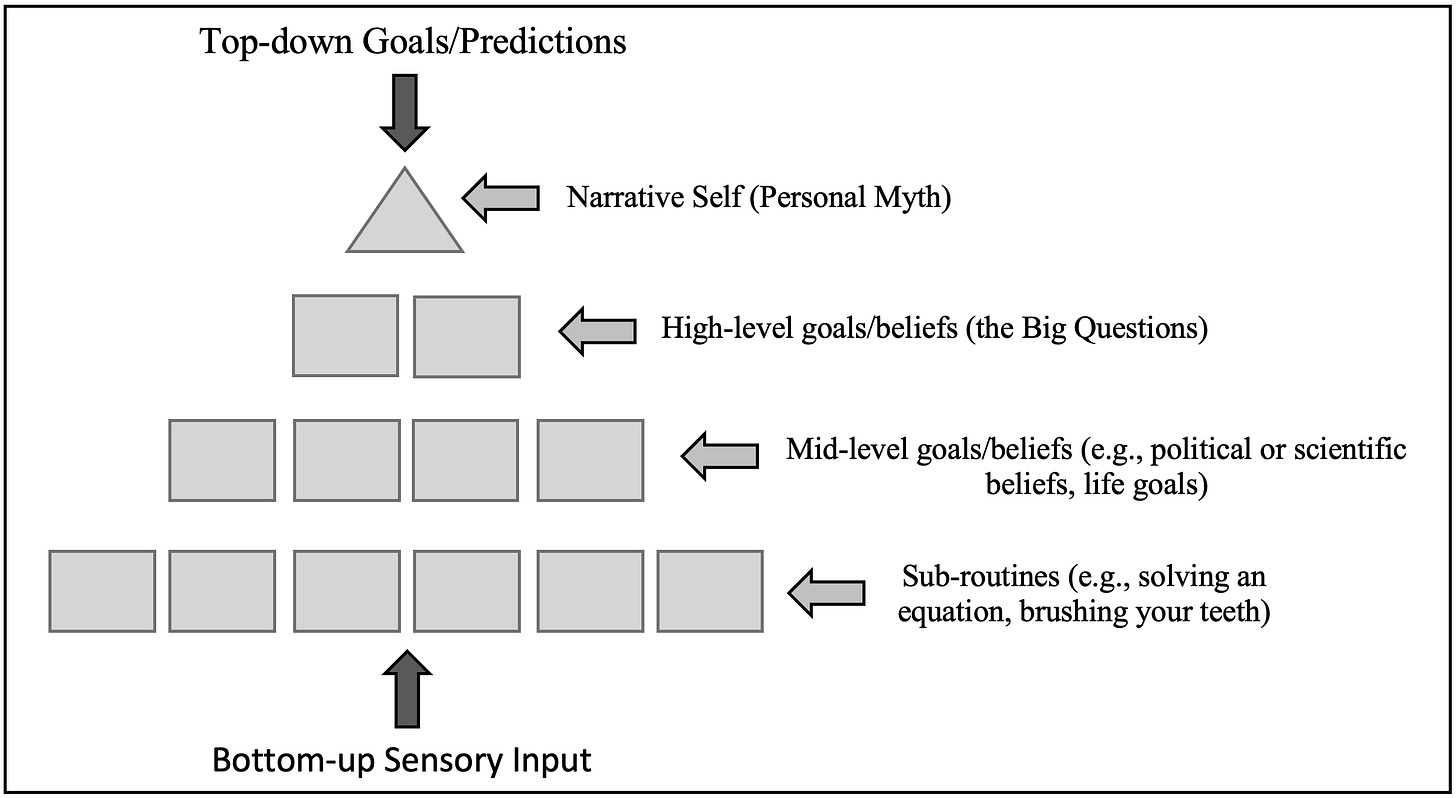

We tend to think of the mind as a single thing—a voice in the head, a point of view, a seat of consciousness. But of course, this view of the mind is biased by our own consciousness, which simply does not have access to the underlying processes that produce this perspective. Under that conscious surface, the mind is more like a hierarchical control system. The top of the hierarchy deals with highly generalizable and long-term predictions and aims: deep values, sense of identity, metaphysical assumptions, etc. The lowest levels of the hierarchy manage concrete, moment-to-moment tasks: fine motor movements, posture, tactile sensation, etc.

Each level operates as part of a nested feedback loop: higher layers generate broad, abstract predictions or goals, while lower layers implement those goals in context-sensitive detail. When the system detects a mismatch—between what it expects or desires and what it gets—it adjusts, attempting to minimize the discrepancy.

This structure has deep roots in cybernetics. All the way back in 1998, it was thoroughly explored by psychologists Carver and Scheier in their book On the Self-Regulation of Behavior. Drawing on the study of cybernetic (i.e., feedback-predicated) systems, they proposed that human behavior is guided by a cascade of self-regulating loops. Modern cognitive science now echoes this view, describing the brain as a hierarchical inference engine: a system that constantly predicts the world at multiple levels of abstraction and updates itself in response to error. To understand thought, feeling, and behavior, we must first understand this structure. The mind can be understood as a stack of predictions and goals, organized hierarchically by abstraction and time-scale.

Let’s make this more concrete: below is a simple model of the hierarchical mind with examples of the kinds of goals and predictions that would reside at each level.

At the top of the hierarchy, you see the term “narrative self”, which I will refer to as your personal myth. This is inspired by Hirsh, Mar, & Peterson’s 2013 commentary in which they argue that:

… the hierarchical predictive processing account… can be usefully integrated with narrative psychology by situating personal narratives at the top of an individual’s knowledge hierarchy. Narrative representations function as high-level generative models that direct our attention and structure our expectations about unfolding events. (p. 216)

What could they mean by this? In this context, your personal myth doesn’t refer to a detail-rich story about your life. The top of the hierarchy isn’t necessarily concerned with the time that you peed your pants in 3rd grade, even if this did have some profound effect on your life course. Rather, the top of the hierarchy is occupied by an aspirational narrative about the kind of person you want to be. What is it to be a “good person”? Must a good and successful person be rich? Powerful? Pious? Just?

The next level of the hierarchy is occupied by what Taves and colleagues (2018) referred to as “The Big Questions” that make up a worldview. They identify five types of questions that constitute a worldview:

Ontology: What exists? What is real?

Epistemology: How do we know what is true?

Axiology: What is the good that we should strive for?

Praxeology: What actions should we take?

Cosmology: Where do we come from and where are we going?

These questions are asked in the most general possible sense. Praxeology, for example, is not concerned with the actions we should take in order to brush our teeth properly, but the general actions we need to take in order to be a “good person” (however that may be defined). The big questions are necessarily the most abstract because they apply to all situations at all times. Everything a person comes into contact with may be considered as real or unreal, true or false, proper or improper, good or bad. Thus, whether our answers to the big questions are implicit (i.e., unconscious) or explicit, they necessarily reside at the highest levels of abstraction in the processing hierarchy.

All organisms must have answers to these questions, although outside of humans these answers (e.g., food is good, predators are bad) will usually be implicit rather than explicit (Taves et al., 2018). Throughout much of human history, explicit answers to the big questions have tended to take on a mythological or religious format, most often in the form of a narrative (Bouizegarene et al., 2020; Hirsh et al., 2013; Peterson, 1999; Peterson & Flanders, 2002). More recently, philosophy and science have attempted to provide answers to the big questions.

No matter what format these answers are in (mythological, philosophical, or scientific), people are highly motivated to protect the abstract beliefs and values that make up their worldviews (Brandt & Crawford, 2020; Goplen & Plant, 2015; Hirsh et al., 2012; Peterson & Flanders, 2002). We’ll explore why that’s the case in more detail further below.

Our personal myth, and our answers to the Big Questions, direct our attention and structure our expectations because every time you perceive a mismatch between them and reality, a prediction-error is produced. These prediction errors signal a mismatch—between who you aspire to be and who you’re currently being, or between what you believe the world is like and how it actually is. This mismatch produces anxiety or other negative emotions, directing your attention to whatever aspect of your life is lacking. Your response to that kind of error can take three basic forms:

1. You can ignore or re-interpret the error, maintaining your self-image and worldview in the process.

2. You can allow the error to reshape lower-level goals, beliefs, habits, etc., so that the error is less likely to be produced again in the future.

3. You can alter your high-level structure, changing your own narrative self-concept and worldview to accommodate whatever behavior produced the error.

Basically: Deny the error, change your behavior, or change your self.

These are, in fact, the three kinds of response available for any prediction error, no matter what level of the hierarchy it occurs at. Whenever a prediction error is registered, whether in high-level goals or low-level perception, the system can (1) reinterpret or suppress the error, (2) change behavior to eliminate it, or (3) revise its higher-level expectations so that the signal is no longer experienced as an error at all.

But which of these is more likely to occur in response to an error? The short answer is: it depends. It depends primarily on what level of the hierarchy the error occurs at. To understand why, we will need to review Hirsh, Mar, & Peterson’s psychological entropy framework.

Psychological Entropy

While some scientists resist the idea of equating entropy with disorder, this is mostly just pedantry. Entropy does, in fact, track our intuitive notions of disorder quite well. Consider the following examples of entropy:

• Gas spreading out in a container? That’s an increase in entropy, and clearly the gas is becoming more disordered.

• Melting ice? Structure breaks down—yep, more disordered.

• Uncertainty in information theory? More possible messages = more disorder.

• Black hole entropy? You know almost nothing about its internal state—a maximal kind of information-theoretic disorder.

And so for our purposes, we can ignore mathematical definitions and just think of entropy as disorder, broadly understood. I will also sometimes use the terms chaos and entropy interchangeably. Again, certain kinds of scientists would balk at this usage. Chaos does, in fact, have a technical definition (see Chapter 1) that is different from disorder and entropy. But for the purposes of this book, unless otherwise stated, I am using these terms according to their common understanding rather than the technical definitions used in complexity science or elsewhere.

Enter Hirsh, Mar, & Peterson’s 2012 psychological entropy framework. Psychological entropy refers here to behavioral uncertainty. How confident are you about the correct course of action over the next 5 seconds, 5 minutes, or 5 years? High confidence indicates low entropy; low confidence indicates high entropy.

The amount of psychological entropy we are experiencing at any given time is usually felt as anxiety. When we are existentially uncertain about the direction of our life — frozen in place like a deer in the headlights — we feel anxious. When we are smoothly confident about our selves and our direction, we feel calm, cool, and collected.

An intuitive way to understand this concept is to think about different distributions of confidence in a set of behavioral options. Consider the following example: You’re a man who has been dating your girlfriend for three years. You love her, but the thought of making a life-long commitment to her is anxiety-provoking. What if you can’t be sexually exclusive for the rest of your life? What if she gains considerable weight or becomes mentally ill? Let’s be honest: most men do think about these things before marriage, even if they don’t say it out loud. In this situation, you’re not opposed to marriage in general, you’re just not sure if you’re willing to make the commitment yet. You’re also suspecting that she may leave you if you don’t make your mind up soon. Here are the options swimming through your mind:

1. Propose now.

2. Honestly promise her that a proposal is coming soon.

3. Dishonestly promise her that a proposal is coming soon (i.e., string her along).

4. Break up with her.

Let’s take a look at high-entropy and low-entropy psychological states in relation to these options. The percentages below represent your internal sense of how likely you are to choose each path—not a formal calculation, but an intuitive confidence profile. A maximum entropy state would look like this:

Option 1: 25% chance of making this decision.

Option 2: 25% chance of making this decision.

Option 3: 25% chance of making this decision.

Option 4: 25% chance of making this decision.

In other words, the state of highest entropy is the one in which all available options seem equally appealing. This is maximum uncertainty. You are incapable of taking decisive action because no behavioral option seems obviously correct. This is a decision that you would be very anxious about.

A relatively low-entropy state would look like this:

Option 1: 97% chance of making this decision.

Option 2: 1% chance of making this decision.

Option 3: 1% chance of making this decision.

Option 4: 1% chance of making this decision.

At this point, barring some change in circumstances, you’re highly confident that this is the woman you want to marry and spend your life with. Low anxiety, high confidence.
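The percentages above are intuitive confidence profiles, not measurements. Still, if you want to put a number on the difference between the two states, the standard tool is Shannon’s entropy formula from information theory. The short sketch below applies it to the two profiles. The function and variable names are my own, and treating a felt sense of confidence as a probability distribution is a simplifying assumption.

```python
import math


def shannon_entropy_bits(probabilities):
    """Shannon entropy in bits; options with zero probability contribute nothing."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


# The two confidence profiles from the marriage example.
undecided = [0.25, 0.25, 0.25, 0.25]
decided = [0.97, 0.01, 0.01, 0.01]

print(round(shannon_entropy_bits(undecided), 3))  # 2.0
print(round(shannon_entropy_bits(decided), 3))    # 0.242
```

Two bits is the most entropy that four options can carry, which is why the evenly split profile counts as maximum uncertainty. The confident profile carries roughly an eighth of that.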

The amount of anxiety produced by uncertainty largely depends on the level of the processing hierarchy at which the conflict occurs. Most people don’t feel particularly anxious even if they are maximally uncertain about what they’re going to have for breakfast. This kind of uncertainty is swiftly collapsed down to a decision: “I’ll just make some eggs, I guess.” No big deal.

Uncertainty at higher levels of the hierarchy is much more distressing. Major life events can trigger this kind of existential uncertainty. For example, if the man from the previous example married his sweetheart, then came home from work early 10 years later to find her in bed with his best friend, this would almost certainly trigger a massive cascade of psychological entropy:

Who am I? Am I the kind of person who gets fooled so easily? Am I the kind of person who gets betrayed, both by the love of my life and my most trusted confidante? Is there any such thing as love in this world? Should I ever trust anyone again? Should I just kill myself, and maybe them too?

This is psychological entropy at its worst—not just uncertainty about what to do, but uncertainty about how to be. The reason higher-level uncertainty like this is so disturbing is because these abstract models have many lower-level systems nested beneath them. If you don’t know what kind of person you are, or the kind of person you want to be, you also don’t know what you should be aiming for. Love? Money? Sex? Piety? Without some high-level belief structure, each of these may look equally appealing, or none may seem appealing at all.

That’s because when you lose confidence in high-level beliefs (like your identity, your purpose, or the possibility of love), you don’t just lose those beliefs. You destabilize everything that depends on them. The structure begins to unravel from the top down. Your psyche experiences a massive spike in entropy: too many competing interpretations, not enough certainty to act. The result is, at minimum, a large amount of anxiety. In extreme cases it can include nervous breakdowns or even psychosis.

It is for this reason that we jealously protect the beliefs and values at the highest levels of the hierarchy. People usually don’t become angry if you question their choice in breakfast food, but what if you question their religion, political views, marriage, career choice, or their values in general? This sort of challenge is very likely to provoke defensiveness, even if the challenge is proposed in good faith. To question a breakfast order is, at most, slightly annoying. To question a worldview is a threat to your mental and emotional stability, and many people don’t take that lightly.

Complexity Management

The necessity of protecting ourselves from chaos, psychological or otherwise, underlies our defensiveness about high-level beliefs and values. This was the subject of Peterson & Flanders’s 2002 paper on complexity management.

The world is far too complex for our cognitive models of it to be literally accurate. Our predictive models necessarily simplify the world, flattening its chaos into a manageable frame of reference. This simplification isn’t a bug; it’s a feature. We could never function if we had to account for every variable in every moment. But this comes at a cost: our simplified models are always partially wrong. The world is too complex, too dynamic, and too full of unknowns for our simplified predictive models to map perfectly onto reality.

That means we’re always walking a tightrope. On one side lies rigidity: the refusal to revise one’s models, even in the face of error. On the other lies chaos: the continual erosion of belief in the name of openness. Both are dangerous. If you're too rigid, you become blind to new information, incapable of growth, locked into dogma. But if you’re too open—if you fail to defend your high-level beliefs—you risk falling into nihilism, or worse: becoming the kind of person who believes in everything, and therefore nothing. As Peterson and Flanders argue, the psyche must reduce and simplify the complexity of the world simply because that reduction is necessary to act at all. When the true complexity of the world comes rushing back in, we risk falling into anxiety, paralysis, or delusion.

In this light, the persecution of heretics by ideologues or religious zealots isn’t mere sadism — it’s a form of complexity management. The heretic is not an imagined threat. He or she is an actual threat to the shared cognitive maps that hold a civilization together. If a religious worldview is threatened within a highly cohesive or conformist society, it really does threaten the stability of both individuals and the society at large.

Soldiers fight holy wars, but what if that holiness is brought into question? Cathedrals are built for a loving and almighty God, but what if that God doesn’t exist? Kings rule by divine right, but what if that divinity is an illusion? Questioning the deeply held assumptions of a culture is not just about belief. It is, rather, a threat to all of the institutions that depend on that belief. Those institutions are the glue holding civilization together, and when the belief system collapses, so do they. This is in addition to the fact that successfully challenging someone’s high-level beliefs (their personal myth and answers to the Big Questions) is likely to generate an outsized amount of psychological entropy. The threat is both sociological and psychological.

Make no mistake — the heretic, while potentially necessary and truthful, is a true representative of chaos. And the defenders of the current order will always persecute him because they implicitly understand the threat that he represents.

Relevance Realization

We’ve been discussing the consequences of prediction error: how we respond when our predictive models fail. When there’s a mismatch between our predictions and what we actually encounter, we can ignore the error, revise our behavior, or revise our beliefs.

It’s clear that most prediction errors are simply ignored. There are always slight deviations between what we expect to perceive and what we actually perceive, but most of these slight errors are irrelevant to us. Think back to our discussion of overfitting and underfitting at the beginning of this chapter.

If we actually paid attention to most or all prediction errors, our cognitive models would end up behaving like the model on the left. They would be too precise — accurately tracking irrelevant detail at the expense of generalization. In other words, we wouldn’t be able to see the forest because we’d be too intent on perceiving every minute detail of a single tree. On the other hand, if we go too far in the other direction, we would miss out on too many relevant details.

How do we actually handle this tradeoff? In predictive processing, precision-weighting is the process by which we intelligently ignore irrelevant prediction errors — the more “precise” we deem a sensory input to be, the more impact it has on updating our perceptual system.

But this poses a problem: precision-weighting is a relatively underdeveloped concept within predictive processing. It’s clear what it is (the assignment of weight to predictions or bottom-up input based on precision/confidence), but it’s not always clear how this process works under the hood. The relevance realization framework, articulated and popularized by cognitive scientist (and my former collaborator) John Vervaeke, helps to fill these gaps.

Relevance realization provides a framework for understanding how a system like the brain can dynamically regulate what it treats as important—how it filters signal from noise, without relying on a fixed rulebook or infinite computational power. A full explanation of relevance realization is unnecessary for our purposes. Instead, we just need to understand that relevance realization consists of:

1. Competing interactions (opponent-processing relationships), leading to

2. Self-organized criticality (a cascade event, often in the form of an insight), leading to

3. Complexification (i.e., differentiation and integration) of cognition over the course of development.

Let’s explore step 1 first. Instead of relying on a single master algorithm, relevance realization suggests that our perceptual system engages in what’s called opponent processing: the continuous balancing of opposing pressures or demands. This is, in fact, a common strategy in biological systems.

Consider the sympathetic and parasympathetic nervous systems. These two branches of the autonomic nervous system operate in direct opposition: the sympathetic system prepares the body for action—accelerating heart rate, increasing alertness, mobilizing energy, fight-or-flight—while the parasympathetic system calms the body down, restoring balance and conserving resources. Neither system is inherently better than the other. Their value depends entirely on context. Sometimes we need to be in a state of high alert, and at others we need to be calm and reflective. The ability to shift fluidly between these states, or to hold them in dynamic tension, is what allows the organism to adapt effectively to a changing environment.

The brain’s capacity to determine what is relevant relies on similar tensions. Vervaeke and colleagues identify three such trade-offs — competing goals — that structure how the brain negotiates relevance in real time. Each pair operates in an opponent-processing relationship, where the tension between them helps our perceptual system zero in on relevant input. These sets of competing goals are:

1. Exploration vs. Exploitation: An eternal problem faced by all organisms: do I keep eating from my current berry patch or leave to find a better one? Do I marry my girlfriend or keep dating? Do I buy the car at the dealership or look for a better deal? A system that optimizes for the dynamic balance between exploration and exploitation is engaging in relevance realization.

2. Specialization vs. Generalization: Do I spend my life learning everything about how to fix a single type of car, or do I become a jack-of-all-trades? Do I become a scientific specialist, digging deep within a single field, or do I become a generalist who works across disciplinary boundaries? These are just a couple of examples of the specialist-generalist tradeoff. The optimal solution to this tradeoff often depends on the stability of your environment. Whereas specialists will tend to thrive in highly stable environments, generalists do better in more volatile situations. If you spend your whole life learning how to catch a single type of fish, and then that fish goes extinct, your specialized knowledge becomes useless overnight. Generalists may not be as efficient, but they adapt more easily.

3. Focusing vs. Diversifying: Do I narrow my attention to a single object, task, or idea—or do I open myself up to a wider field of possibilities? Focusing allows for precision. It helps you finish your essay, hit the target, and notice details that others might miss. But focus comes at a cost: by zeroing in on one thing, you necessarily filter out others. Diversifying, on the other hand, widens the scope. It makes you more likely to notice unexpected opportunities or hidden dangers, but it also increases noise and the risk of distraction. A healthy cognitive system must strike a dynamic balance between focus and openness, tuning itself to the needs of the moment. When the task is clear and the goal well-defined, focus is king. When the situation is uncertain, ambiguous, or novel, diversifying becomes more valuable. This balancing act—between zooming in and zooming out—is another core process by which the brain realizes what is relevant, and filters what is not.

These three tradeoffs all fall under the umbrella of a higher-order tradeoff: Efficiency vs. Resiliency. We must be efficient in our current environment while simultaneously being resilient in the face of environmental changes. These goals are always traded off with each other.

Order, Chaos, and Relevance

The title of Part 1 is “Order and Chaos,” and relevance realization is also a reflection of this theme. Efficiency is what you need when there is order. If the environment remains stable (and if you expect it to remain stable into the future), you should aim to achieve your goals as quickly and with as little resource-expenditure as you can. When the environment is stable, efficient organisms win.

If the environment is chaotic (or if you expect the environment to become chaotic in the future), your best bet is to sacrifice some efficiency in order to build more generalizable skills and cognitive models, which will necessarily involve more exploration and diversification. When the environment changes rapidly, resilient organisms win.

The Biological Function of Dreams

Predictive processing and the mind-as-hierarchy model have led to a new and compelling theory about the cognitive function of dreams. The neuroscientist Erik Hoel published this theory in a 2021 paper called “The overfitted brain: Dreams evolved to assist generalization”.

Recall that overfitting consists of fitting a model too precisely to the data, such that irrelevant deviations (i.e., noise) are incorporated into the model.

Overfitting is not just a problem in statistics and machine learning. It is a problem for any system that must create generalizable models based on noisy input—which is exactly what our cognitive-perceptual system has to do. We take what we have learned in a particular situation and generalize that learning to many other situations, none of which will be precisely the same as the situation in which the learning occurred.
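A toy illustration may help make overfitting concrete. The sketch below is my own construction, not something taken from Hoel’s paper: the linear “world”, the noise level, the sample sizes, and the function names (`fit_line`, `fit_memorizer`) are all assumptions chosen for simplicity. It compares a model that extracts a general pattern with one that memorizes every detail of what it has seen, noise included.

```python
import random

def make_data(n, rng, noise=1.0):
    """Draw n samples from y = 2x + 1 plus Gaussian noise (an arbitrary toy 'world')."""
    xs = [rng.uniform(0.0, 10.0) for _ in range(n)]
    ys = [2.0 * x + 1.0 + rng.gauss(0.0, noise) for x in xs]
    return xs, ys

def fit_line(xs, ys):
    """Ordinary least squares for a straight line: the 'general pattern' model."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept

def fit_memorizer(xs, ys):
    """Answer with the y of the closest training x: every noisy detail is kept."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]

def mse(model, xs, ys):
    """Mean squared prediction error of a model on a data set."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

rng = random.Random(0)                      # fixed seed so the run is repeatable
train_x, train_y = make_data(200, rng)      # the situations we learned from
test_x, test_y = make_data(1000, rng)       # new situations we must generalize to

line = fit_line(train_x, train_y)
memorizer = fit_memorizer(train_x, train_y)

print("line      train/test error:", mse(line, train_x, train_y), mse(line, test_x, test_y))
print("memorizer train/test error:", mse(memorizer, train_x, train_y), mse(memorizer, test_x, test_y))
```

The memorizer makes no errors at all on the situations it has already seen, because it has absorbed their noise as if it were signal. On fresh samples it should do noticeably worse than the simple line, which is the signature of overfitting.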

But how could dreams help us with this task? Hoel points to three phenomenological properties of dreams that he argues are analogous to strategies used within machine learning to avoid overfitting, and which would help us to do the same. These are:

1. “First, the sparseness of dreams in that they are generally less vivid than waking life in that they contain less sensory and conceptual information (i.e., less detail). This lack of detail in dreams is universal, and examples include the blurring of text causing an impossibility of reading, using phones, or calculations.”

2. “Second, the hallucinatory quality of dreams in that they are generally unusual in some way (i.e., not the simple repetition of daily events or specific memories). This includes the fact that in dreams, events and concepts often exist outside of normally strict categories (a person becomes another person, a house a spaceship, and so on).”

3. “Third, the narrative property of dreams, in that dreams in adult humans are generally sequences of events ordered such that they form a narrative, albeit a fabulist one.” (p. 3)

Hoel argues that all of these properties help prevent overfitting. The lack of detail in dreams is the most obvious case, because preventing overfitting requires ignoring the incidental details of a situation. The unusual quality of dreams helps with generalization because we need our cognitive models to be capable of generalizing to situations that are outside of the norm. We conjure up unusual situations, Hoel suggests, so that our models can learn to deal with the unusual. Finally, the narrative structure of dreams helps with generalization because narrative allows us to take a set of facts and order them in such a way that we can extract a big-picture lesson from them. Stories usually have a “moral”, after all, which means that they are meant to convey some generalizable lesson about how to act in the world. While the “moral” of a dream is not always obvious, dreams usually do have a narrative structure.

If Hoel’s theory is correct, this would help explain why REM sleep—and the dreaming that accompanies it—is conserved across many mammalian species. All animals must solve the problem of overfitting, not just humans.

Mythology as Collective Dream

The idea that dreams help prevent overfitting leads to an interesting hypothesis about the collective function of mythological narratives. In The Hero with a Thousand Faces, Joseph Campbell said that “Dream is the personalized myth, myth the depersonalized dream…”.

In fact, myths have the same three phenomenological properties that Hoel pointed out in relation to dreams:

1) Mythological narratives lack detail. Unlike with novels, we know very little about the personalities, habits, or relationships of most mythological characters. Myths are like hyper-condensed stories—all unnecessary details are excluded.

2) Mythological narratives always use unusual concepts that defy normally strict categories. Think about the number of talking animals, half-human creatures (e.g., satyrs, centaurs), people who are made out of the dust of the earth, the world resting on the back of a turtle, dying and resurrecting, having seven heads, etc. Myths are filled with unusual creatures and events, which gives them an almost hallucinatory quality.

3) And, of course, mythological narratives are... narratives.

Could it be, then, that mythological narratives also serve to prevent overfitting and aid with generalization? Hoel thinks they do:

This hypothesized connection explains why humans find the directed dreams we call “fictions” so attractive and also reveals their purpose: they are artificial means of accomplishing the same thing naturally occurring dreams do. Just like dreams, fictions keep us from overfitting our model of the world… There is a sense in which something like the hero myth is actually more true than reality, since it offers a generalizability impossible for any true narrative to possess (Hoel, 2019).

This idea is in accordance with what Jordan Peterson wrote in Maps of Meaning. Peterson argued that over long periods of time, humans have observed people who behave admirably and have told stories about them. We then try to extract the general pattern underlying the behavior of these admirable figures. That general pattern is encoded into a fictional story. Over time, these fictions are distilled until only the most abstract pattern remains. These hyper-condensed fictions are the “hero myths” that are found cross-culturally.

These myths have a general pattern, which is the metamythology (Chapter 1). As Jordan Peterson suggested, the metamythology describes what to do when you don’t know what to do. This is a pattern of action that generalizes to many different situations, thus helping us to avoid overfitting our cognitive models of the world. In this way, the hero myth appears to serve the same function as dreams, at the collective rather than individual level of analysis.

Slope-Chasing and The Will to Power

One of the most important insights to come out of the predictive processing literature is that we do not simply seek to reduce or eliminate prediction errors. Instead, we seek to increase the rate at which we are capable of reducing prediction errors, a process sometimes called slope-chasing. Predictive processing theorists have argued that our emotional state (whether we are happy and content or sad and anxious) doesn’t track prediction-error minimization per se, but the rate at which errors are being reduced. A decrease in this rate is accompanied by negative emotion, while an increase is accompanied by positive emotion. Miller and colleagues state that:

Error dynamics — the rate of change in error reduction — are registered by the organism as embodied affective states. We can think of an agent’s performance in reducing error in terms of a slope that plots the various speeds that prediction errors are being accommodated relative to their expectations. Positively and negatively valenced affective states are a reflection of better than or worse than expected error reduction, respectively. Valence refers to the organism’s evaluation of how it is faring in its engagement with the environment (i.e., how well or badly things are going for the organism). (p. 9)

This distinction may seem insignificant at first glance, but it has profound consequences for how we actually behave. In the first place, it dramatically increases the value of exploratory behavior. We will often tolerate—and even seek out—temporary increases in prediction error as long as they help us to increase the global rate of prediction error minimization in the long run. That means we will voluntarily expose ourselves to uncertainty, chaos, and failure so that we can improve our predictive models.
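The difference between tracking error and tracking the rate of error reduction can be made concrete with a few lines of arithmetic. What follows is a minimal sketch under my own assumptions, not Miller and colleagues’ formal model: the function name `valence`, the idea of a single fixed `expected_rate`, and the example numbers are all hypothetical.

```python
def valence(errors, expected_rate):
    """Toy valence signal: actual error reduction minus expected reduction, per step.

    errors        -- prediction-error magnitudes at successive moments
    expected_rate -- how much error the agent expects to shed per step (assumed constant)
    """
    signal = []
    for previous, current in zip(errors, errors[1:]):
        reduction = previous - current            # how much error was actually shed
        signal.append(reduction - expected_rate)  # better (+) or worse (-) than expected
    return signal

# Hypothetical error trace: error never rises, yet the signal is negative at two steps.
trace = [10.0, 8.0, 7.0, 7.0, 4.0]
print(valence(trace, expected_rate=1.5))  # [0.5, -0.5, -1.5, 1.5]
```

Notice the second step: error fell from 8 to 7, so things are objectively improving, yet the signal is negative because the improvement was slower than expected. On this picture, feeling bad is compatible with making progress, as long as the progress is slower than anticipated.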

Notably, Miller and colleagues describe this dynamic in the exact language we developed in the first two chapters of this book: that of attractor dynamics and self-organized criticality. They state that:

When a particular niche ceases to yield productive error slopes negative valence signals to the agent that they ought to destroy their own fixed-point attractors in favor of more itinerant wandering policies of exploration. Patterns of effective connectivity emerge and dissolve due to both environmental conditions and changes in our own internal states and behaviours. However, we also have a tendency to actively destroy these attractor states, thereby inducing instabilities and creating [itinerant or wandering] dynamics. Alternatively, when errors accumulate, due to our frequenting spaces where there is an unmanageable complexity or volatility, the negative valence then tunes the agent to fall back on opportunities for action that are already well known and highly reliable. Notice, when all goes well such slope-chasing agents will be constantly moved by their valenced affective states (via changes in error dynamics) towards this edge of criticality, where error is neither too complex nor too easily predicted that the agent no longer has anything to learn. (pp. 13-14)

In other words, we stay in a stable attractor until rates of error minimization begin to decrease, then we begin to explore in order to find a new attractor that increases the rate of error reduction again. If we meet with too much complexity, we fall back to more familiar patterns of behavior. This process keeps us close to criticality, the border between order and chaos (Chapter 2).
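The three-way pattern just described (stay, explore, or fall back) can be written down as a simple decision rule. This is only an illustrative sketch: the function name `next_policy`, the thresholds `min_slope` and `overload`, and the three labels are assumptions of mine, not quantities defined by Miller and colleagues.

```python
def next_policy(recent_errors, min_slope=0.1, overload=5.0):
    """Pick a coarse behavioral mode from a short history of prediction errors.

    recent_errors -- error magnitudes, oldest first (at least two values)
    min_slope     -- smallest average error reduction per step that still counts
                     as a productive niche (hypothetical threshold)
    overload      -- error level treated as unmanageable (hypothetical threshold)
    """
    if len(recent_errors) < 2:
        raise ValueError("need at least two error values to estimate a slope")

    # Average error reduction per step; negative means error is accumulating.
    slope = (recent_errors[0] - recent_errors[-1]) / (len(recent_errors) - 1)

    if slope < 0 and recent_errors[-1] > overload:
        return "fall back"   # too much complexity: return to familiar, reliable actions
    if slope < min_slope:
        return "explore"     # the niche has stopped paying off: wander and look for a new one
    return "stay"            # error is still falling fast enough: remain in the attractor

print(next_policy([4.0, 3.0, 2.0, 1.0]))   # stay
print(next_policy([1.0, 1.0, 1.0, 1.0]))   # explore
print(next_policy([3.0, 5.0, 7.0, 9.0]))   # fall back
```

An agent that follows a rule like this never settles for long in a place where there is nothing left to learn, and never lingers where learning is impossible. That is one way to picture what it means to hover near the edge of criticality.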

Let’s think about what this means in the context of our evolved psychological adaptations (Chapter 3). We have clearly evolved to desire sex. But we don’t just want sex, we want to become more attractive and charismatic so that we are better at getting it (and better at the act itself). Similarly, we don’t just want resources, we want to become more effective and socially powerful so that we are better at attaining resources. We don’t just want social status, we want to become somebody who can continually rise in status throughout our lives.

Each of our drives is aiming to attain its goal—sex, resources, social status, etc.—but more than that, each drive aims to improve its ability to attain the goal. In other words, each drive wants to become more powerful.

It is exactly this dynamic that Nietzsche described as the will to power of each drive that exists within us. The Nietzsche scholar John Richardson comments:

Power is drives’ deepest goal because it has been so strongly and widely selected for: causal dispositions that enhance their activity, that try to (not just maintain but) expand its scope, are those that get most often and firmly fixed in the genetic line. BGE.6: “every drive seeks to rule.” Each, that is, involves a deep effort at “more,” at growth, by extending its control over other forces; mere survival is pursued only as a second-best. This growth is always in the activity that is the drive’s distinguishing and defining goal. So the sex-drive seeks enhancement in sexual activity… This deep aim at power serves, Nietzsche thinks, as a kind of meta-aim that guides the drive’s relation to its more particular goals. (Richardson, 2020 p. 98)

Each drive seeks power, defined as the ability to become better at attaining its defining goals. If we accept that the rate of error reduction is equivalent to power, Nietzsche’s contention that positive and negative affect track perceived power is vindicated by modern cognitive science:

What is happiness? The feeling that power is growing, that resistance is overcome. (The Antichrist, section 2)

I say “perceived” power because we can be mistaken about the rate at which we are reducing prediction error. Some actions, like taking addictive drugs or playing video games, might stimulate the pathways (e.g., dopamine) which signal global prediction error reduction without actually reducing global prediction error. One function of dopamine is to track goal achievement (or prediction error minimization), but dopaminergic pathways can be hijacked by drugs, video games, pornography, and other supernormal stimuli [1]. This means that positive affect and goal achievement can become detached from actual growth, leading to addiction, depression, mania, or other pathological mental states.

Both Nietzsche and contemporary predictive processing theorists recognize that the feeling of growth can come apart from actual growth. Miller and colleagues discuss this fact in the context of addiction, which simulates a growing rate of prediction-error minimization without actually producing one. Addictive substances trick our brain into thinking that we are getting really good at minimizing prediction error, and therefore they feel really good, at least for a while.

Nietzsche associated happiness with the feeling that power is growing. Miller and colleagues (2021) associate happiness with perceived increases in the rate at which prediction error is being reduced. Properly understood, these claims are equivalent. For Nietzsche, the fact that the feeling of power can diverge from actual power was an important part of his arguments against hedonism and utilitarianism. Richardson (2020) states:

The point is important for Nietzsche. It contributes to his arguments against hedonism and utilitarianism, both of which mistake the end as a mere feeling— pleasure or happiness—and fail to see the judgment it involves as to power (growth). The end is that power, and the feeling is a way of judging that the end is achieved: “‘it [everything living] strives for power, for more in power’—pleasure is only a symptom of the feeling of achieved power, a difference-consciousness.” But since the judgment involved in this enjoyment can be false, the feeling can be deceptive, the end not really achieved. Thus the feeling of growth can come apart from growth itself. (Richardson, 2020 pp. 59-60)

For Nietzsche, the goal is to actually achieve the underlying growth that positive affect is meant to track. The goal is power, not the mere feeling of power. And power should not be understood as a steady state, but as an ongoing process. Richardson (2020) states:

…we need to bear in mind that power is not a goal in the most usual sense since it includes a “movement” within it. To be sure, Nietzsche sometimes does speak of a “growth in power,” but I take this as a loose way to remind us that power is itself a growth. It is not a (steady) state or condition, as we usually think a “goal” to be. It is not even the (settled) condition of having grown in control, but rather the process of growing in control. Life’s deep aim is to change in a certain direction, and not to arrive at some point or position in that direction. (Richardson, 2020 p. 61)

This means that, according to Nietzsche, life’s deep aim, and the highest goal we can aspire to, is best understood as a process and not a state. In predictive processing terms, it is the process by which we increase the rate of global error reduction. The ability to increase this rate can be understood simply as power. John Vervaeke and colleagues’ account of relevance realization describes the process by which we become more cognitively powerful. Jordan Peterson’s metamythology shows how we have used narrative to intuitively grasp that process before we had a scientific or philosophical understanding of it.

In Sum

Here is the territory we’ve covered in this chapter:

1. The brain/mind is best understood as a hierarchical control system which aims to reduce prediction error at every level of the hierarchy.

2. Prediction errors at low levels of the hierarchy produce small amounts of psychological entropy — we can easily change our predictions to accommodate them. Prediction errors at the top of the hierarchy produce massive amounts of psychological entropy — changing predictions to accommodate them is extremely chaotic and dangerous. There can be serious psychological and sociological consequences for messing with high-level beliefs and values.

3. For this reason, people protect their high-level beliefs and values from threats. Denying information that threatens them, and punishing the people who carry that information, are not uncommon responses to these kinds of threats.

4. Our perceptual system determines which errors deserve our attention through a set of opponent processing relationships which can be summarized as the trade-off between efficiency and resiliency. Tying this back to the theme of Part 1, this is really a trade-off between what is optimal in a state of order (efficiency) and what is optimal in a state of chaos (resiliency).

5. Overfitting is a constant problem for any predictive model that must take what it learns in particular situations and generalize that learning to novel situations. Dreams may have evolved to help us avoid overfitting our predictive models. Given that mythological narratives have the same phenomenological properties as dreams, they may be a collective strategy to assist with generalization.

6. We do not just seek to reduce prediction error, but to increase the rate of prediction error minimization. Our emotional state tracks perceived changes in the rate of global error reduction. Nietzsche intuitively understood this, and it was a core aspect of his will to power thesis.

In the next chapter we will look at individual differences in perception and cognition that correspond to the tradeoffs inherent to relevance realization. Some of us are efficient specialists while others are more resilient generalists. Both types have strengths and weaknesses that we can learn to harness and compensate for.

[1] Dopamine doesn’t directly produce positive emotion. It’s more involved in wanting (motivational salience) than in liking (hedonic pleasure). Still, achieving a dopamine-mediated goal often triggers positive emotion via other neural systems, which is why using addictive drugs usually feels great… right up until it doesn’t.
